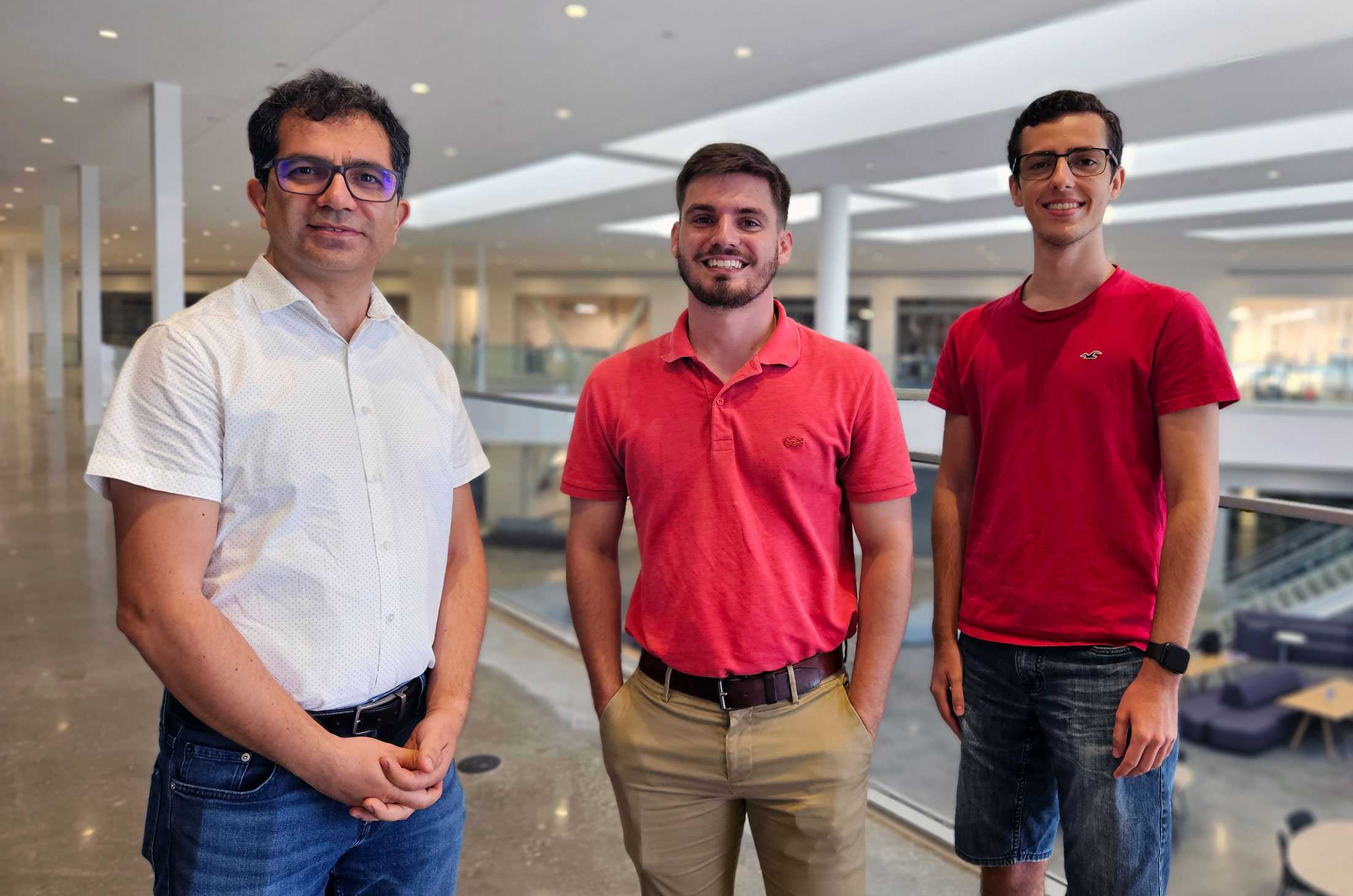

Dr. Oguzhan Topsakal (left), assistant professor of computer science at Florida Polytechnic University, and computer science seniors Jackson Harper and Colby Edell completed a research project this summer, creating a benchmarking tool to measure the reasoning and planning abilities of large language models like ChatGPT.

Dr. Oguzhan Topsakal, assistant professor of computer science at Florida Polytechnic University, and two undergraduate research assistants have created a pioneering benchmarking tool to evaluate the capabilities of artificial intelligence models like ChatGPT and Gemini.

The tool uses simple games like tic tac toe to assess the strategic reasoning and planning abilities of these large language models (LLMs), which include ChatGPT models by OpenAI, Claude models by Anthropic, Gemini models by Google, and Llama models by Meta.

The study revealed that LLMs struggle with simple game strategies.

“After you see LLMs do so many things, you would think they would do such a simple task very easily, but we have seen they are not really capable of playing these simple games so easily,” Topsakal said. “My 9-year-old son saw it playing on my screen and said, ‘It is not playing well, I can do better.’

“We expect LLMs to perform well because they are versatile and capable of correcting, suggesting or generating text. However, we’ve observed that they are not as strong in reasoning intelligently.”

The effort began as a class project last fall and evolved into a comprehensive research study.

“Jackson (Harper) and his in-class team worked on preparing a mobile app for playing tic tac toe with large language models and at the end of their class, I proposed to them if they wanted to continue and develop a research study,” Topsakal said. “We enhanced the project and made different kinds of LLMs compete against each other.”

Topsakal and Harper, a senior computer science major with a cybersecurity concentration, published a paper about their findings in the journal Electronics in April. The research led to the development of the online benchmarking tool created this summer with a grant from Florida Poly. The tool allows different LLMs to compete against one another in simple games like tic tac toe, four in a row and gomoku.

They took up the research along with Colby Edell, a senior computer science major with a concentration in software engineering, who made significant contributions to the development of the web-based game simulation software and benchmark.

“There was no benchmarking tool that made these LLMs compete with each other,” Topsakal said. “This competition between the models, I thought, would be a good, interesting contribution to the research.”

The research has implications for the development of Artificial General Intelligence (AGI), he added.

“We all think AGI is coming soon because of these advances in LLMs,” he said. “AGI is when AI is capable of doing the vast majority of tasks at a human level, but we see that it is not there yet. When it happens, we need to have some tools to measure these capabilities, and this benchmarking tool will help.”

As AI technology continues to evolve, this benchmarking tool could provide insight into the progress toward AGI.

“This is something that can be used 10 years from now when we get closer to AGI and we see the stats rise and improve through the years,” Harper said. “The games may be simple, but we’ll see how AI can adapt to the games.”

The team made its source code available on the developer platform GitHub, allowing other researchers to contribute to the project.

“Anyone who wants to give it a try can download the code and try it,” Topsakal said. “They can also submit results for new LLMs as they emerge.”

The research team posted a preprint of the study on arXiv, an open-access archive, and submitted the study to a leading journal in the field for consideration. Once published, the study will mark an important step in evaluating the capabilities of large language models.

Contact:

Lydia Guzmán

Director of Communications

863-874-8557